The heartbeat+mon+coda solution

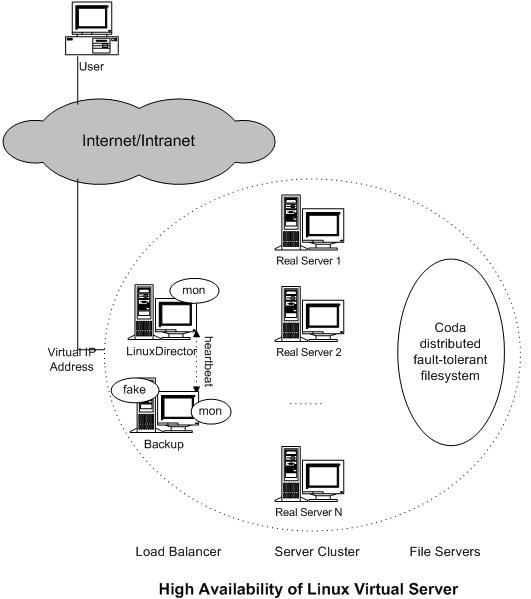

High availability for the virtual server can be provided with the mon, heartbeat, fake and coda software. "mon" is a general-purpose resource monitoring system that can be used to monitor network service availability and server nodes. The "heartbeat" code currently provides heartbeats between two nodes over a serial line and over UDP. "fake" is IP takeover software that works by ARP spoofing. The architecture of this solution is illustrated in the accompanying figure.

Server failover is handled as follows. The "mon" daemon runs on the load balancer to monitor the service daemons and server nodes in the cluster. The fping.monitor is configured to check every t seconds whether the server nodes are alive, and the relevant service monitor is configured to check the service daemons on all the nodes every m seconds. For example, http.monitor can be used to check the http services, ftp.monitor is for the ftp services, and so on. An alert script is written to remove or add a rule in the Linux Virtual Server table when a server node or daemon is detected to be down or up. Therefore, the load balancer can automatically mask failures of service daemons or servers and put them back into service when they recover.

Now the load balancer becomes a single point of failure for the whole system. To mask the failure of the primary load balancer, we need to set up a backup server for it. The "fake" software is used by the backup to take over the IP addresses of the load balancer when the load balancer fails, and the "heartbeat" code is used to detect the status of the load balancer and to activate or deactivate "fake" on the backup server. Two heartbeat daemons run on the primary and the backup, and they periodically send each other a message such as "I'm alive" over the serial line. When the heartbeat daemon on the backup does not hear the "I'm alive" message from the primary within the defined time, it activates fake to take over the virtual IP address and provide the load-balancing service. When it later receives the "I'm alive" message from the primary again, it deactivates fake to release the virtual IP address, and the primary goes back to work.
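The takeover rule described above can be sketched as a small decision function. This is only an illustration of the logic, not the heartbeat daemon's own code: the function names `peer_is_dead` and `backup_action`, the `yes`/`no` flag and the use of plain second counters are all assumptions made for the example.

```shell
#!/bin/sh
# Illustrative sketch of the backup's decision, not heartbeat's actual code.

# peer_is_dead NOW LAST_HEARD DEADTIME
# Succeeds when no "I'm alive" message was heard for more than DEADTIME seconds.
peer_is_dead() {
    [ $(( $1 - $2 )) -gt "$3" ]
}

# backup_action NOW LAST_HEARD DEADTIME HOLDING_VIP(yes|no)
# Prints what the backup would do with the virtual IP address.
backup_action() {
    holding=$4
    if peer_is_dead "$1" "$2" "$3"; then
        # Primary is silent: take the address unless we already hold it.
        if [ "$holding" = "no" ]; then echo takeover; else echo keep; fi
    else
        # Primary is alive: give the address back if we hold it.
        if [ "$holding" = "yes" ]; then echo release; else echo standby; fi
    fi
}

backup_action 100 95 10 no     # heard 5 s ago          -> standby
backup_action 100 85 10 no     # silent for 15 s        -> takeover
backup_action 120 119 10 yes   # primary is heard again -> release
```

The deadtime acts as the only threshold here, so a shorter value speeds up takeover but makes a false takeover on a briefly delayed heartbeat more likely.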

However, in the current implementation, a failover or takeover of the primary load balancer loses the established connections recorded in its hash table, so clients have to send their requests again.

Coda is a fault-tolerant distributed file system, a descendant of the Andrew File System. The contents of the servers can be stored in Coda, so that the files are highly available and easy to manage.

Configuration example

The following is an example of setting up a highly available virtual web server via direct routing.

The failover of real servers

"mon" is used to monitor the service daemons and server nodes in the cluster. For example, fping.monitor can be used to monitor the server nodes, http.monitor can be used to check the http services, ftp.monitor is for the ftp services, and so on. So we just need to write an alert that removes or adds a rule in the virtual server table when a server node or daemon is detected to be down or up. Here is an example called lvs.alert, which takes the virtual service (IP:Port) and the address of the real server as parameters.

    #!/usr/bin/perl
    #
    # lvs.alert - Linux Virtual Server alert for mon
    #
    # It can be activated by mon to remove a real server when the
    # service is down, or add the server when the service is up.
    #
    #
    use Getopt::Std;
    getopts ("s:g:h:t:l:P:V:R:W:F:u");

    $ipvsadm = "/sbin/ipvsadm";
    $protocol = $opt_P;
    $virtual_service = $opt_V;
    $remote = $opt_R;

    if ($opt_u) {
        $weight = $opt_W;
        if ($opt_F eq "nat") {
            $forwarding = "-m";
        } elsif ($opt_F eq "tun") {
            $forwarding = "-i";
        } else {
            $forwarding = "-g";
        }

        if ($protocol eq "tcp") {
            system("$ipvsadm -a -t $virtual_service -r $remote -w $weight $forwarding");
        } else {
            system("$ipvsadm -a -u $virtual_service -r $remote -w $weight $forwarding");
        }
    } else {
        if ($protocol eq "tcp") {
            system("$ipvsadm -d -t $virtual_service -r $remote");
        } else {
            system("$ipvsadm -d -u $virtual_service -r $remote");
        }
    }
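Before wiring the alert into mon, it can help to see which ipvsadm command a given set of options leads to. The following dry-run sketch mirrors the option handling of lvs.alert in shell and only prints the command instead of running it. The function name `lvs_alert_cmd`, its positional arguments and the default weight of 1 are assumptions made for this illustration; they are not part of lvs.alert.

```shell
#!/bin/sh
# Dry-run sketch: print the ipvsadm command that lvs.alert would issue.
# lvs_alert_cmd UP(yes|no) PROTOCOL VIRTUAL_SERVICE REAL_SERVER [WEIGHT] [FORWARDING]
lvs_alert_cmd() {
    up=$1; proto=$2; vs=$3; rs=$4
    weight=${5:-1}      # assumed default; lvs.alert itself has none
    fwd=${6:-dr}

    # -t selects a TCP virtual service, anything else is treated as UDP.
    case "$proto" in
        tcp) svc=-t ;;
        *)   svc=-u ;;
    esac

    if [ "$up" = "yes" ]; then
        # Map the forwarding method name to the ipvsadm flag.
        case "$fwd" in
            nat) method=-m ;;
            tun) method=-i ;;
            *)   method=-g ;;
        esac
        echo "/sbin/ipvsadm -a $svc $vs -r $rs -w $weight $method"
    else
        echo "/sbin/ipvsadm -d $svc $vs -r $rs"
    fi
}

# Service went down: the real server is removed from the table.
lvs_alert_cmd no tcp 10.0.0.3:80 192.168.0.1
# Service came back: the real server is added again with weight 5, direct routing.
lvs_alert_cmd yes tcp 10.0.0.3:80 192.168.0.1 5 dr
```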

The lvs.alert script is placed in the /usr/lib/mon/alert.d directory. The mon configuration file (/etc/mon/mon.cf or /etc/mon.cf) can then be set up to monitor the http services and servers in the cluster as follows.

    #
    # The mon.cf file
    #
    #
    # global options
    #
    cfbasedir  = /etc/mon
    alertdir   = /usr/lib/mon/alert.d
    mondir     = /usr/lib/mon/mon.d
    maxprocs   = 20
    histlength = 100
    randstart  = 30s

    #
    # group definitions (hostnames or IP addresses)
    #
    hostgroup www1 www1.domain.com

    hostgroup www2 www2.domain.com

    #
    # Web server 1
    #
    watch www1
        service http
            interval 10s
            monitor http.monitor
            period wd {Sun-Sat}
                alert mail.alert wensong
                upalert mail.alert wensong
                alert lvs.alert -P tcp -V 10.0.0.3:80 -R 192.168.0.1 -W 5 -F dr
                upalert lvs.alert -P tcp -V 10.0.0.3:80 -R 192.168.0.1 -W 5 -F dr

    #
    # Web server 2
    #
    watch www2
        service http
            interval 10s
            monitor http.monitor
            period wd {Sun-Sat}
                alert mail.alert wensong
                upalert mail.alert wensong
                alert lvs.alert -P tcp -V 10.0.0.3:80 -R 192.168.0.2 -W 5 -F dr
                upalert lvs.alert -P tcp -V 10.0.0.3:80 -R 192.168.0.2 -W 5 -F dr

Note that if the destination port on the real server differs from the port of the virtual service in LVS/NAT, the parameters of lvs.alert must include it, for example "lvs.alert -V 10.0.0.3:80 -R 192.168.0.3:8080".

Now the load balancer can automatically mask failures of service daemons or servers and put them back into service when they recover.
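The watch sections for the individual web servers differ only in the host group name and the real server address, so for a larger cluster they could be generated rather than typed. The sketch below is one possible way to do that; the function name `mon_watch`, the third optional argument and the extra host `www3` / `192.168.0.3` are hypothetical, and the mail alerts are left out for brevity.

```shell
#!/bin/sh
# Sketch: print a mon.cf watch section for one real server.
# mon_watch GROUP REAL_SERVER_IP [VIRTUAL_SERVICE]
mon_watch() {
    group=$1; rip=$2
    vip=${3:-10.0.0.3:80}   # virtual service used in this example setup
    cat <<EOF
watch $group
    service http
        interval 10s
        monitor http.monitor
        period wd {Sun-Sat}
            alert lvs.alert -P tcp -V $vip -R $rip -W 5 -F dr
            upalert lvs.alert -P tcp -V $vip -R $rip -W 5 -F dr
EOF
}

# Hypothetical third web server; append the output to mon.cf after review.
mon_watch www3 192.168.0.3
```

A matching `hostgroup` line still has to be added for each generated section.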

The failover of the load balancer

To prevent the load balancer from becoming a single point of failure for the whole system, we need to set up a backup of the load balancer and let the two heartbeat each other periodically. Please read the GettingStarted document included in the heartbeat package; it is simple to set up a 2-node heartbeat system.

As an example, we assume that the two load balancers have the following addresses:

| Host | IP address |
| --- | --- |
| lvs1.domain.com (primary) | 10.0.0.1 |
| lvs2.domain.com (backup) | 10.0.0.2 |
| www.domain.com (VIP) | 10.0.0.3 |

After installing heartbeat on both lvs1.domain.com and lvs2.domain.com, simply create /etc/ha.d/ha.conf as follows:

    #
    #       keepalive: how many seconds between heartbeats
    #
    keepalive 2
    #
    #       deadtime: seconds-to-declare-host-dead
    #
    deadtime 10
    #       hopfudge maximum hop count minus number of nodes in config
    hopfudge 1
    #
    #       What UDP port to use for udp or ppp-udp communication?
    #
    udpport 1001
    #       What interfaces to heartbeat over?
    udp     eth0
    #
    #       Facility to use for syslog()/logger (alternative to log/debugfile)
    #
    logfacility     local0
    #
    #       Tell what machines are in the cluster
    #       node    nodename ...    -- must match uname -n
    node    lvs1.domain.com
    node    lvs2.domain.com

The /etc/ha.d/haresources file is as follows:

lvs1.domain.com 10.0.0.3 lvs mon
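The haresources line above can be read field by field: the node that normally owns the resources, the service IP address, and then the names of the init scripts to run (here lvs and mon). The following sketch only splits such a line to make that reading explicit; the function name `describe_haresources` and its output wording are made up for the illustration and are not part of heartbeat.

```shell
#!/bin/sh
# Sketch: split a haresources line into its fields for inspection.
# describe_haresources "NODE IP SCRIPT..."
describe_haresources() {
    # Re-split the single argument on whitespace into positional parameters.
    set -- $1
    node=$1; addr=$2
    shift 2
    echo "preferred node: $node"
    echo "service address: $addr"
    for script in "$@"; do
        echo "resource script: $script"
    done
}

describe_haresources "lvs1.domain.com 10.0.0.3 lvs mon"
```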

The /etc/rc.d/init.d/lvs is as follows:

    #!/bin/sh
    #
    # You probably want to set the path to include
    # nothing but local filesystems.
    #
    PATH=/bin:/usr/bin:/sbin:/usr/sbin
    export PATH

    IPVSADM=/sbin/ipvsadm

    case "$1" in
        start)
            if [ -x $IPVSADM ]
            then
                echo 1 > /proc/sys/net/ipv4/ip_forward
                $IPVSADM -A -t 10.0.0.3:80
                $IPVSADM -a -t 10.0.0.3:80 -r 192.168.0.1 -w 5 -g
                $IPVSADM -a -t 10.0.0.3:80 -r 192.168.0.2 -w 5 -g
            fi
            ;;
        stop)
            if [ -x $IPVSADM ]
            then
                $IPVSADM -C
            fi
            ;;
        *)
            echo "Usage: lvs {start|stop}"
            exit 1
    esac

    exit 0
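The start branch of the script lists each real server by hand, and the same addresses appear again in mon.cf. One way to keep the two in step is to derive the ipvsadm commands from a single list of real servers. The sketch below only prints the commands so they can be reviewed first; the function name `lvs_start_cmds` is hypothetical, and the weight of 5 and the `-g` (direct routing) flag are simply copied from the example above.

```shell
#!/bin/sh
# Sketch: print the ipvsadm commands for one virtual service and its real servers.
# lvs_start_cmds VIRTUAL_SERVICE REAL_SERVER...
lvs_start_cmds() {
    vip=$1
    shift
    # Create the virtual service, then add each real server to it.
    echo "/sbin/ipvsadm -A -t $vip"
    for rip in "$@"; do
        echo "/sbin/ipvsadm -a -t $vip -r $rip -w 5 -g"
    done
}

lvs_start_cmds 10.0.0.3:80 192.168.0.1 192.168.0.2
```

For the two real servers of this example, the printed commands are the same three ipvsadm calls that the start branch runs.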

Finally, make sure that all the files are created on both the lvs1 and lvs2 nodes and adapted to your own environment, then start the heartbeat daemon on the two nodes.

Note that fake (IP takeover by gratuitous ARP) is already included in the heartbeat package, so there is no need to set up "fake" separately. When the lvs1.domain.com node fails, lvs2.domain.com takes over all the haresources of lvs1.domain.com, i.e. it takes over the 10.0.0.3 address by gratuitous ARP and starts the /etc/rc.d/init.d/lvs and /etc/rc.d/init.d/mon scripts. When lvs1.domain.com comes back, lvs2 releases the HA resources and lvs1 takes them back.